| Tutorial >> Filters, Queues and Bandwidth |


Using iperf to generate TCP traffic is not much different from generating UDP traffic, except that the receiver's maximum window size can have a significant impact on throughput. The default server (receiver) window size is 64 KB, or about 42 full-size TCP segments. If we insert a 100 msec delay along a TCP flow's path (discussed later), a 64 KB receiver window will limit the throughput to about 5 Mbps even if the link rates are 600 Mbps, because a sender can have at most one window of unacknowledged data outstanding per round-trip time. All of the ONL hosts allow receiver windows of up to 20 MB if the user so chooses.
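The arithmetic behind the 5 Mbps figure can be checked with a short sketch. It assumes the 100 msec delay is the whole round-trip time (RTT) and that 64 KB means 65,536 bytes; `window_limited_mbps` and `window_needed_bytes` are hypothetical helpers for illustration, not ONL or iperf commands.

```sh
#!/bin/sh
# Back-of-the-envelope TCP throughput bounds (a sketch, not an ONL tool).
# A sender can keep at most one receiver window of unacknowledged data
# outstanding per round-trip time, so throughput <= window / RTT.

# window_limited_mbps WINDOW_BYTES RTT_MSEC
# Prints the window-limited throughput ceiling in Mbps (1 Mbps = 10^6 bit/s).
window_limited_mbps() {
    awk -v w="$1" -v rtt="$2" 'BEGIN { printf "%.2f\n", w * 8 / (rtt / 1000) / 1e6 }'
}

# window_needed_bytes RATE_MBPS RTT_MSEC
# Prints the window (bandwidth-delay product, in bytes) needed to keep a
# link of RATE_MBPS busy when the round-trip time is RTT_MSEC.
window_needed_bytes() {
    awk -v r="$1" -v rtt="$2" 'BEGIN { printf "%.0f\n", r * 1e6 * (rtt / 1000) / 8 }'
}

window_limited_mbps 65536 100   # default 64 KB window, 100 msec RTT
window_needed_bytes 600 100     # window needed to fill a 600 Mbps link
```

Under those assumptions the first call prints 5.24 (Mbps) and the second prints 7500000, so keeping a 600 Mbps link busy at a 100 msec RTT would need a window of roughly 7.5 MB, which is within the 20 MB limit the ONL hosts allow.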

```sh
#!/bin/sh
# Usage: run-tservers
# Example: ssh onl.arl; run-tservers
# Note: Clients (Servers) are NSP2 (NSP1) hosts
#
. /users/onl/.topology   # define env vars $n1p2, ... ("." is the POSIX form of "source")

# Start one iperf TCP server (-s) per NSP1 host, each with a 4 MB window (-w 4M).
ssh $n1p2 /usr/local/bin/iperf -s -w 4M &
ssh $n1p3 /usr/local/bin/iperf -s -w 4M &
ssh $n1p4 /usr/local/bin/iperf -s -w 4M &
```

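```sh
#!/bin/sh
# Usage: run-tclients
# Example: run-tclients
# Note: Clients (Servers) are NSP2 (NSP1) hosts
#
. /users/onl/.topology   # define env vars $n2p2, ... ("." is the POSIX form of "source")

# Repeat forever: start three 30-second TCP flows staggered by 10 seconds,
# then wait long enough for all of them to finish before the next round.
while true; do
    ssh $n2p2 /usr/local/bin/iperf -c n1p2 -t 30 &
    sleep 10
    ssh $n2p3 /usr/local/bin/iperf -c n1p3 -t 30 &
    sleep 10
    ssh $n2p4 /usr/local/bin/iperf -c n1p4 -t 30 &
    sleep 40
done
```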

Figs. 1 and 2 show two scripts: a TCP iperf server script (run-tservers) and a TCP iperf client script (run-tclients). Both are typically run on the ONL login host to start the iperf TCP servers and clients remotely, and they are similar to the corresponding iperf UDP scripts described in Generating Traffic With Iperf. The two main differences are that the client script here loops continuously and both scripts use TCP-specific parameters.

The command 'run-tservers' launches three iperf servers in the background on ONL hosts $n1p2, $n1p3, and $n1p4, each with a receiver window size of 4 MB, enough for about 2,800 full-size (1,500-byte) TCP segments to be in transit. Note that the script reaches these hosts through their host interface names on the control network, not the internal network.
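The number of full-size segments a window can hold is just the window divided by the segment size. This sketch assumes iperf's `-w 4M` means 4 × 1,048,576 bytes; `segments_in_flight` is a hypothetical helper, not part of iperf or ONL.

```sh
#!/bin/sh
# segments_in_flight WINDOW_BYTES SEGMENT_BYTES
# Prints how many full-size segments fit in one receiver window
# (integer division: a partial segment is not counted).
segments_in_flight() {
    echo $(( $1 / $2 ))
}

# Assumes "4M" and "20 MB" are powers of two (1 MB = 1024 * 1024 bytes).
segments_in_flight $((4 * 1024 * 1024)) 1500    # window used by run-tservers
segments_in_flight $((20 * 1024 * 1024)) 1500   # ONL hosts' maximum window
```

It prints 2796 for the 4 MB window used by run-tservers and 13981 for the 20 MB maximum.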

The command 'run-tclients' launches three remote iperf clients in the background, with start times staggered by 10 seconds so that the flows appear offset in the traffic charts. The -c command-line argument indicates where the server is running and uses the internal network interface name (e.g., n1p2, n1p3, n1p4). The -w option, which sets the window size, is unnecessary on the clients in this example because bulk data flows only toward the servers (i.e., there is no bulk transfer in the reverse path). The final flag, -t, specifies how long (in seconds) each client sends traffic. The sleep 40 command at the end of the while loop gives all of the flows time to finish before the loop repeats the whole demonstration.
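The timing of one pass through the run-tclients loop can be worked out from its sleep and -t values. This sketch assumes the time spent in ssh and iperf start-up is negligible, which will not be exactly true on real hosts; the variable names are illustrative only.

```sh
#!/bin/sh
# Sketch: one pass of the run-tclients schedule.
# Assumes ssh/iperf start-up time is negligible, so client i starts at
# (i - 1) * STAGGER seconds and stops DURATION seconds later.
STAGGER=10      # the two "sleep 10" commands
DURATION=30     # iperf -t 30
FINAL_SLEEP=40  # the trailing "sleep 40"
NFLOWS=3

i=1
while [ "$i" -le "$NFLOWS" ]; do
    start=$(( (i - 1) * STAGGER ))
    echo "flow $i: t=${start}s to t=$(( start + DURATION ))s"
    i=$(( i + 1 ))
done

# All flows overlap from the last start until the first stop
# (valid only while last_start < DURATION, as with these values).
last_start=$(( (NFLOWS - 1) * STAGGER ))
echo "all $NFLOWS flows active: t=${last_start}s to t=${DURATION}s"

# One loop pass sleeps STAGGER (NFLOWS - 1) times plus FINAL_SLEEP once.
period=$(( last_start + FINAL_SLEEP ))
last_stop=$(( last_start + DURATION ))
echo "loop period: ${period}s, idle gap before next pass: $(( period - last_stop ))s"
```

With the script's values, the flows run from 0 to 30 s, 10 to 40 s, and 20 to 50 s; all three compete only between t=20 s and t=30 s, and each 60-second pass ends with about 10 seconds of idle time before the next one starts.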

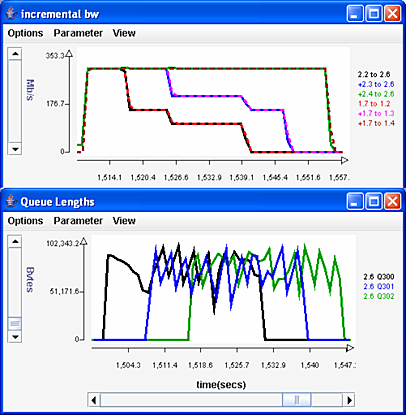

Fig. 3 shows the traffic and queue length charts for the two-NSP configuration we used before, but with the three UDP flows replaced by TCP flows. In that configuration, GM filters placed the three flows into reserved flow queues 300-302, each of which was assigned an equal bandwidth share (quantum). As with the UDP flows, the traffic chart shows that the three flows receive equal bandwidth out of egress port 2.6. Now, however, the queue length charts show the familiar sawtooth shape produced by TCP congestion control.

Revised: Mon, July 31, 2006
